Basic artificial intelligence (AI) is no longer the domain of science fiction, as evidenced by the buzz surrounding machine learning, neural networks, and data mining techniques. The support infrastructure and readily available software make it easier to apply these powerful techniques to everyday problems. This article gives an overview of how the new AI capabilities can be used in products today.

How machine learning works

In many ways, machine learning is not all that different from the traditional statistical techniques that have been used for over a century to make sense of raw data. Both take some observations from the world and use that data to predict what the information represents. These can be classification problems, such as diagnosing a disease or determining whether a piece of email is spam, or regression problems, which try to come up with a number that represents the odds of something occurring, like the chance someone will default on a loan.
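
To make the distinction concrete, here is a minimal Python sketch using the loan example. The function names, the two input factors, and the weights are all invented for illustration; nothing here is fitted to real data. The first function returns a number (the regression view), and the second turns that same number into a yes/no label (the classification view).

```python
import math

def default_probability(debt_to_income: float, missed_payments: int) -> float:
    """Regression-style output: a number between 0 and 1.

    The weights below are made up for illustration, not learned from data.
    """
    score = -3.0 + 4.0 * debt_to_income + 0.8 * missed_payments
    return 1.0 / (1.0 + math.exp(-score))

def is_likely_default(debt_to_income: float, missed_payments: int,
                      threshold: float = 0.5) -> bool:
    """Classification-style output: a yes/no label derived from the same number."""
    return default_probability(debt_to_income, missed_payments) >= threshold

low_risk = default_probability(0.2, 0)   # a small number, well under 0.5
high_risk = is_likely_default(0.9, 3)    # a label rather than a number
print(round(low_risk, 3), high_risk)
```

In a real system the weights would be learned from examples rather than typed in by hand, which is what the rest of this article describes.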

There are a number of techniques used in modern machine learning, but they typically follow the same basic pattern: we have a number of factors that, when combined, can be used to predict some result. We collect a number of examples, called a training set, each of which contains the factors and, importantly, the actual outcome that we want to predict in future examples (when that outcome is not yet known).
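
As a rough sketch of what a training set looks like in code, the Python below stores each example as a set of factors plus its known outcome, using the spam case mentioned earlier. The `Example` class, the factor names, and the four rows are hypothetical, chosen only to show the shape of the data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Example:
    factors: Dict[str, float]   # the inputs we can observe
    outcome: Optional[str]      # the known answer, or None if not yet known

# A tiny, made-up training set: every example carries its actual outcome.
training_set: List[Example] = [
    Example({"num_links": 7, "has_word_free": 1, "known_sender": 0}, "spam"),
    Example({"num_links": 0, "has_word_free": 0, "known_sender": 1}, "not spam"),
    Example({"num_links": 3, "has_word_free": 1, "known_sender": 0}, "spam"),
    Example({"num_links": 1, "has_word_free": 0, "known_sender": 1}, "not spam"),
]

# A future example: same factors, but the outcome is what we want predicted.
new_email = Example({"num_links": 5, "has_word_free": 1, "known_sender": 0}, None)

# Most tools expect the factors and the outcomes as two parallel lists.
inputs = [list(ex.factors.values()) for ex in training_set]
targets = [ex.outcome for ex in training_set]
```

Splitting the data into `inputs` and `targets` mirrors how most machine learning libraries expect to receive it, though the exact format varies from tool to tool.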

The flu or just a cold?

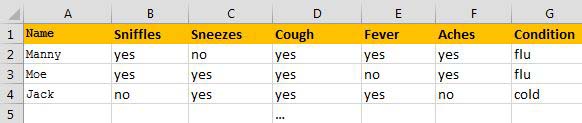

Suppose we want to identify whether someone has a cold or the flu from the symptoms they present to their doctor. We first identify a number of factors that are likely to indicate either condition: sniffles, sneezing, fever, etc. Then, for a large group of people who have already been diagnosed as having one or the other condition, we collect information about all those factors and put it into a spreadsheet.

The idea is that we are creating a set of data that has a list of patients, their symptoms, and what condition they had, as judged by an expert (their doctor in this case). The larger the collection the better, but good results can be had with only a few hundred cases. We then use 90% of this set to train machine learning software to “understand” how much each of the individual symptoms contributes to the diagnosis.
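
The split-and-train step can be sketched in plain Python. This is only an illustration under loud assumptions: the patients are randomly generated with invented symptom probabilities (not clinical data), and the learning method is a small logistic regression that we picked for brevity. Real tools such as TensorFlow or Weka offer many other methods.

```python
import math
import random

SYMPTOMS = ["sniffles", "sneezing", "fever"]

def make_synthetic_cases(n, seed=0):
    """Generate made-up patients; the probabilities are invented, not clinical."""
    rng = random.Random(seed)
    cases = []
    for _ in range(n):
        has_flu = rng.random() < 0.5
        # Invented chance of each symptom appearing, given the condition
        chances = [0.3, 0.2, 0.9] if has_flu else [0.9, 0.8, 0.1]
        symptoms = [1.0 if rng.random() < p else 0.0 for p in chances]
        cases.append((symptoms, 1 if has_flu else 0))  # 1 = flu, 0 = cold
    return cases

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(cases, epochs=200, rate=0.1):
    """Fit one weight per symptom, plus a bias, by stochastic gradient descent."""
    weights = [0.0] * len(SYMPTOMS)
    bias = 0.0
    for _ in range(epochs):
        for symptoms, label in cases:
            predicted = sigmoid(sum(w * x for w, x in zip(weights, symptoms)) + bias)
            error = predicted - label
            weights = [w - rate * error * x for w, x in zip(weights, symptoms)]
            bias -= rate * error
    return weights, bias

cases = make_synthetic_cases(300)
random.Random(1).shuffle(cases)

# Keep 90% for training and set the remaining 10% aside for testing.
split = int(len(cases) * 0.9)
training_cases, held_out_cases = cases[:split], cases[split:]

weights, bias = train(training_cases)
for name, weight in zip(SYMPTOMS, weights):
    print(f"{name}: {weight:+.2f}")
```

Each learned weight is the system's estimate of how strongly a symptom points toward the flu (positive) or a cold (negative), which is the "understanding" described above.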

Once the system is trained, we feed it the remaining cases (where we know the real diagnoses but withhold them from the system) and see whether it can come up with the right answers. If it does so reliably, we now have a software system capable of predicting whether someone has the flu or a cold based on their symptoms.
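
The testing step might look something like the sketch below. The weights stand in for whatever a trained system learned, and the four held-out cases are made up; both are assumptions for illustration. The key point is that `predict` only ever sees the symptoms, and the doctor's diagnosis is used solely to score the answer afterward.

```python
# Hypothetical weights, standing in for the output of a training step.
WEIGHTS = {"sniffles": -1.5, "sneezing": -1.8, "fever": 3.0}
BIAS = 0.0

def predict(symptoms):
    """Return 'flu' or 'cold' from symptoms alone; no diagnosis is passed in."""
    score = BIAS + sum(WEIGHTS[name] * value for name, value in symptoms.items())
    return "flu" if score >= 0 else "cold"

# Made-up held-out cases: (symptoms, the doctor's actual diagnosis)
held_out = [
    ({"sniffles": 1, "sneezing": 1, "fever": 0}, "cold"),
    ({"sniffles": 0, "sneezing": 0, "fever": 1}, "flu"),
    ({"sniffles": 1, "sneezing": 0, "fever": 1}, "flu"),
    ({"sniffles": 1, "sneezing": 1, "fever": 1}, "flu"),
]

# Compare each prediction against the diagnosis that was withheld.
correct = sum(1 for symptoms, diagnosis in held_out if predict(symptoms) == diagnosis)
accuracy = correct / len(held_out)
print(f"{correct} of {len(held_out)} correct ({accuracy:.0%})")
```

With these invented numbers the system misses the last patient, who has every symptom at once. Measuring accuracy on cases the system has never seen is what tells us whether it will hold up on new patients.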

Conclusion

Machine learning is useful any time you need to classify or rate something based on a number of “fuzzy” factors, as long as you have a large enough collection of sample cases to train the system on. There are many free and open-source software tools that make the process fast and easy.

Machine learning resources

There are a large number of free and open-source tools for easily using machine learning in your own applications:

- Google researchers Martin Wattenberg and Fernanda Viégas have a great interactive demo that shows how a simple neural network works. http://playground.tensorflow.org

- TensorFlow by Google is a powerful collection of machine learning tools in an open-source library. It is accessible through Python and other languages. https://www.tensorflow.org

- Weka is a Java-based tool developed by the Machine Learning Group at the University of Waikato in New Zealand that can be used as a stand-alone program or embedded into your own web-based application. http://www.cs.waikato.ac.nz/ml/weka

- Wekinator is a great interactive Weka-based tool created by Rebecca Fiebrink that makes it easy to try out machine learning in real-time applications. http://www.wekinator.org

Online courses on machine learning

There are many online courses available to learn about machine learning. Most are free.

- Coursera’s Machine Learning course, taught by Stanford CS professor Andrew Ng, is a solid overview of machine learning. He is an excellent instructor, but since it is a Stanford CS class, it is very math- and theory-heavy. https://www.coursera.org/learn/machine-learning

- Machine Learning for Musicians and Artists is a good introduction to machine learning for anyone, even if you are not an artist. The course’s thorough, well-taught, and low-math approach gives you enough information to really understand the basic ideas without overwhelming you with detail. https://www.kadenze.com/courses/machine-learning-for-musicians-and-artists

About Bill Ferster

Bill Ferster is a research professor at the University of Virginia and a technology consultant for organizations using web applications for ed-tech, data visualization, and digital media. He is the author of Sage on the Screen (2016, Johns Hopkins), Teaching Machines (2014, Johns Hopkins), and Interactive Visualization (2012, MIT Press), and has founded a number of high-technology startups in past lives. For more information, see www.stagetools.com/bill.